India is on the cusp of a transformative AI revolution, driven by the ambitious IndiaAI initiative. This nationwide program aims to democratize access to cutting-edge AI services by building a scalable, high-performance AI Cloud to support academia, startups, government agencies, and research bodies. This AI Cloud will need to deliver on-demand AI compute, multi-tier networking, scalable storage, and end-to-end AI platform capabilities to a diverse user base with varying needs and technical sophistication.

At the heart of this transformation lies the management layer – the orchestration engine that ensures smooth provisioning, operational excellence, SLA enforcement, and seamless platform access. This is where aarna.ml GPU Cloud Management Software (GPU CMS) plays a crucial role. By enabling dynamic GPUaaS (GPU-as-a-Service), aarna.ml GPU CMS allows providers to manage multi-tenant GPU clouds with full automation, operational efficiency, and built-in compliance with IndiaAI requirements.

Key IndiaAI Requirements and aarna.ml GPU CMS Coverage

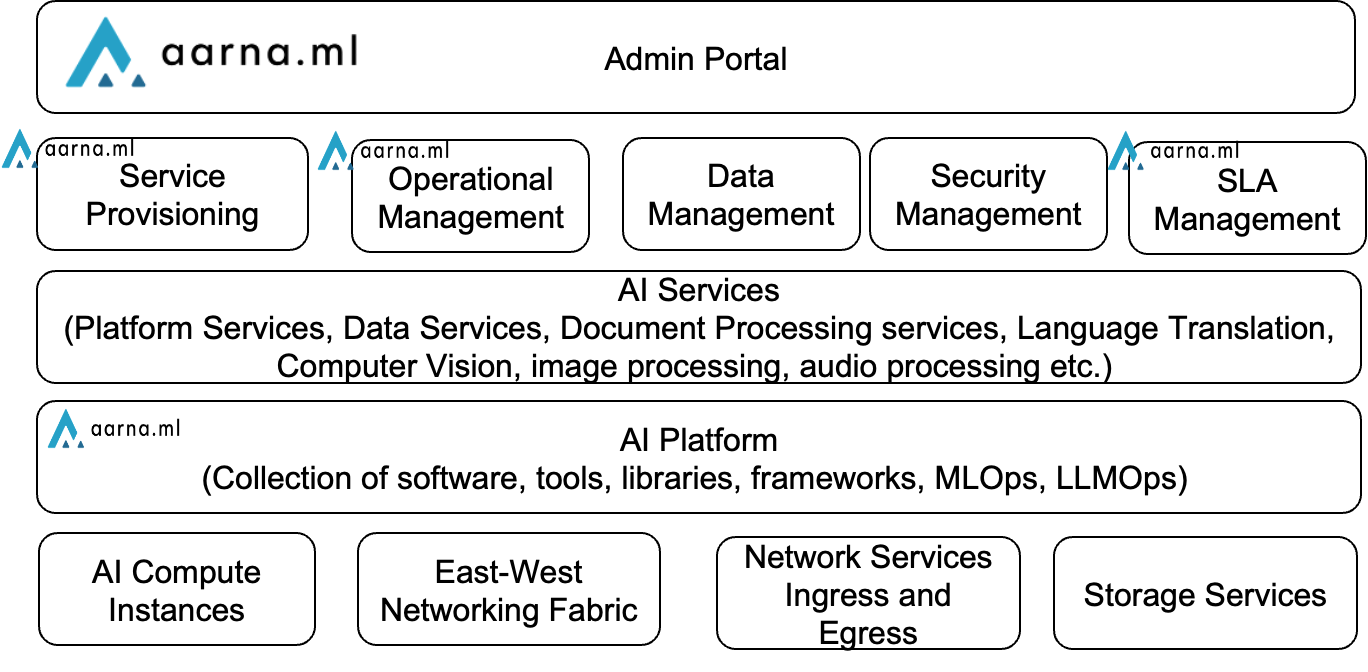

The IndiaAI tender defines a comprehensive set of requirements for delivering AI services on cloud. While the physical infrastructure—hardware, storage, and basic network layers—will come from hardware partners, aarna.ml GPU CMS focuses on the management, automation, and operational control layers. These are the areas where our platform directly aligns with IndiaAI’s expectations.

The accompanying diagram illustrates the IndiaAI requirements and the components supported by aarna.ml GPU CMS.

Service Provisioning

aarna.ml GPU CMS automates the provisioning of GPU resources across bare-metal servers, virtual machines, and Kubernetes clusters. It supports self-service onboarding for tenants, allowing them to request and deploy compute instances through an intuitive portal or via APIs. This dynamic provisioning capability ensures optimal utilization of resources, avoiding underused static allocations.
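To make the contrast with static allocation concrete, here is a minimal sketch of dynamic provisioning from a shared pool. It is not the aarna.ml GPU CMS API: `GpuPool`, its methods, and the tenant names are hypothetical and exist only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical shared pool of GPUs, identified by integer index."""

    total: int
    # Maps GPU index -> tenant name for GPUs currently in use.
    assigned: dict[int, str] = field(default_factory=dict)

    def free_gpus(self) -> list[int]:
        return [i for i in range(self.total) if i not in self.assigned]

    def provision(self, tenant: str, count: int) -> list[int]:
        """Assign `count` free GPUs to `tenant`, or raise if too few are free."""
        free = self.free_gpus()
        if count > len(free):
            raise RuntimeError(f"only {len(free)} GPUs free, {count} requested")
        granted = free[:count]
        for gpu in granted:
            self.assigned[gpu] = tenant
        return granted

    def release(self, tenant: str) -> int:
        """Return all of a tenant's GPUs to the pool; report how many were freed."""
        owned = [g for g, t in self.assigned.items() if t == tenant]
        for gpu in owned:
            del self.assigned[gpu]
        return len(owned)

    def utilization(self) -> float:
        return len(self.assigned) / self.total


pool = GpuPool(total=8)
pool.provision("university-lab", 4)
pool.provision("startup-a", 2)
pool.release("university-lab")    # freed GPUs go back to the shared pool
pool.provision("gov-agency", 5)   # succeeds only because of the release above
print(pool.utilization())         # 0.875
```

With a static split, the five-GPU request would have failed even though four GPUs were idle; returning released GPUs to a common pool is what lets it succeed here.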

Operational Management

The platform delivers end-to-end operational management, from infrastructure discovery and topology validation through real-time performance monitoring and automated issue resolution. Every step of the lifecycle, from tenant onboarding through resource allocation to decommissioning, is automated, which helps keep GPU resources efficiently utilized.
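One way to picture an automated lifecycle is as a small state machine that only permits valid transitions. The sketch below is an assumption for illustration, not the product's internal model: the stage names come from the paragraph above, while `Stage`, `ALLOWED`, and `advance` are hypothetical.

```python
from enum import Enum


class Stage(Enum):
    ONBOARDED = "onboarded"
    ALLOCATED = "allocated"
    DECOMMISSIONED = "decommissioned"


# Hypothetical transition table: which stages may follow each stage.
ALLOWED = {
    Stage.ONBOARDED: {Stage.ALLOCATED, Stage.DECOMMISSIONED},
    Stage.ALLOCATED: {Stage.DECOMMISSIONED},
    Stage.DECOMMISSIONED: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return `target` if the transition is allowed, otherwise raise."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


stage = Stage.ONBOARDED
stage = advance(stage, Stage.ALLOCATED)
stage = advance(stage, Stage.DECOMMISSIONED)
```

Encoding the transitions as data means automation can reject out-of-order steps, such as allocating resources to a tenant that has already been decommissioned.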

SLA Management

SLA enforcement is a critical part of the IndiaAI framework. aarna.ml GPU CMS continuously tracks service uptime, performance metrics, and event logs to ensure compliance with pre-defined SLAs. If an issue arises—such as a failed node, misconfiguration, or performance degradation—the self-healing mechanisms automatically trigger corrective actions, ensuring high availability with minimal manual intervention.
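As a rough illustration of what an SLA check involves, the sketch below computes availability over a reporting window and maps the event types named above to a corrective action. The 30-day window, the targets, the outage data, and every name in `REMEDIATIONS` are assumptions made for this example; they are not taken from the IndiaAI tender or from aarna.ml GPU CMS.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Outage:
    start_s: float  # seconds since the start of the reporting window
    end_s: float


def availability(window_s: float, outages: list[Outage]) -> float:
    """Fraction of the window the service was up (outages must not overlap)."""
    downtime = sum(o.end_s - o.start_s for o in outages)
    return (window_s - downtime) / window_s


def meets_sla(window_s: float, outages: list[Outage], target: float) -> bool:
    return availability(window_s, outages) >= target


# Hypothetical mapping from detected event type to a corrective action name.
REMEDIATIONS = {
    "node_failed": "reschedule_workloads_to_spare_node",
    "misconfiguration": "reapply_known_good_config",
    "performance_degraded": "drain_and_diagnose_node",
}


def corrective_action(event_type: str) -> str:
    # Unknown events fall back to a human operator instead of guessing.
    return REMEDIATIONS.get(event_type, "escalate_to_operator")


WINDOW_S = 30 * 24 * 3600  # 30-day window in seconds
outages = [Outage(1_000, 1_600), Outage(50_000, 51_200)]  # 1,800 s of downtime

print(meets_sla(WINDOW_S, outages, target=0.999))   # True
print(meets_sla(WINDOW_S, outages, target=0.9995))  # False
print(corrective_action("node_failed"))
```

Thirty minutes of downtime in a 30-day window works out to roughly 99.93% availability, so the same month passes a 99.9% target and fails a 99.95% one.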

AI Platform Integration

IndiaAI expects the AI Cloud to offer end-to-end AI platforms with tools for model training, job submission, and model serving. aarna.ml GPU CMS integrates with MLOps and LLMOps tools, enabling users to run AI workloads directly on provisioned infrastructure with support for the NVIDIA GPU Operator, CUDA environments, and the NVIDIA AI Enterprise (NVAIE) software stack. Support for Kubernetes clusters and job schedulers such as Slurm and Run:ai, together with integration with tools like Jupyter and PyTorch, makes it easy to move from development to production.
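On a Kubernetes cluster where the NVIDIA device plugin is running (the GPU Operator deploys it), a workload asks for GPUs through the `nvidia.com/gpu` resource limit. The sketch below builds such a Pod manifest as a plain dictionary. The pod name, container image, and `train.py` command are placeholders, and nothing here is specific to aarna.ml GPU CMS.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Kubernetes Pod manifest that requests `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,  # placeholder image reference
                    "command": ["python", "train.py"],  # placeholder entry point
                    # GPUs are requested as an extended resource under `limits`.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


manifest = gpu_pod_manifest(
    "demo-training-job", "example.com/demo/pytorch-train:latest", gpus=2
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and submitted to a cluster as-is once the placeholders are replaced.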

Tenant Isolation and Multi-Tenancy

A core requirement of IndiaAI is ensuring strict tenant isolation across compute, network, and storage layers. aarna.ml GPU CMS fully supports multi-tenancy, providing each tenant with isolated infrastructure resources to preserve data privacy, performance consistency, and security. Network isolation (including InfiniBand partitioning), per-tenant storage mounts, and independent GPU allocation keep each tenant’s environment operating independently.
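The three isolation dimensions named above can be expressed as a simple audit: no two tenants should share a GPU, an InfiniBand partition key, or a storage mount. The following is a minimal sketch under that assumption. `TenantEnv`, `isolation_violations`, the P_Key values, and the mount paths are all hypothetical and do not describe how aarna.ml GPU CMS enforces isolation.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class TenantEnv:
    name: str
    gpu_ids: frozenset[int]
    ib_pkey: int          # InfiniBand partition key (illustrative value)
    storage_mount: str    # per-tenant mount path (illustrative value)


def isolation_violations(tenants: list[TenantEnv]) -> list[str]:
    """Compare every pair of tenants and describe any shared resource."""
    problems = []
    for a, b in combinations(tenants, 2):
        shared = a.gpu_ids & b.gpu_ids
        if shared:
            problems.append(f"{a.name}/{b.name}: shared GPUs {sorted(shared)}")
        if a.ib_pkey == b.ib_pkey:
            problems.append(f"{a.name}/{b.name}: same P_Key {a.ib_pkey:#06x}")
        if a.storage_mount == b.storage_mount:
            problems.append(f"{a.name}/{b.name}: same mount {a.storage_mount}")
    return problems


tenant_a = TenantEnv("tenant-a", frozenset({0, 1}), 0x8001, "/mnt/tenant-a")
tenant_b = TenantEnv("tenant-b", frozenset({2, 3}), 0x8002, "/mnt/tenant-b")
tenant_c = TenantEnv("tenant-c", frozenset({3, 4}), 0x8002, "/mnt/tenant-c")

print(isolation_violations([tenant_a, tenant_b]))            # []
for problem in isolation_violations([tenant_a, tenant_b, tenant_c]):
    print(problem)
```

In the second call, `tenant-c` overlaps `tenant-b` on GPU 3 and on the partition key, so two violations are reported; a real system would prevent such an assignment at allocation time, not just detect it afterwards.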

Admin Portal

The Admin Portal consolidates all these capabilities into a single pane of glass, ensuring that infrastructure operators have centralized control while providing tenants with transparent self-service capabilities.

aarna.ml GPU CMS Compliance

Conclusion

The IndiaAI initiative requires a sophisticated orchestration platform to manage the complexities of multi-tenant GPU cloud environments. aarna.ml GPU CMS delivers exactly that—a robust, future-proof solution that combines dynamic provisioning, automated operations, self-healing infrastructure, and comprehensive SLA enforcement.

By seamlessly integrating with underlying hardware, networks, and AI platforms, aarna.ml GPU CMS empowers GPUaaS providers to meet the ambitious goals of IndiaAI, ensuring that AI compute resources are efficiently delivered to the researchers, startups, and government bodies driving India’s AI innovation.